Bolívar Enrique Solarte Pardo, Ph.D.

ML & Computer Vision Researcher

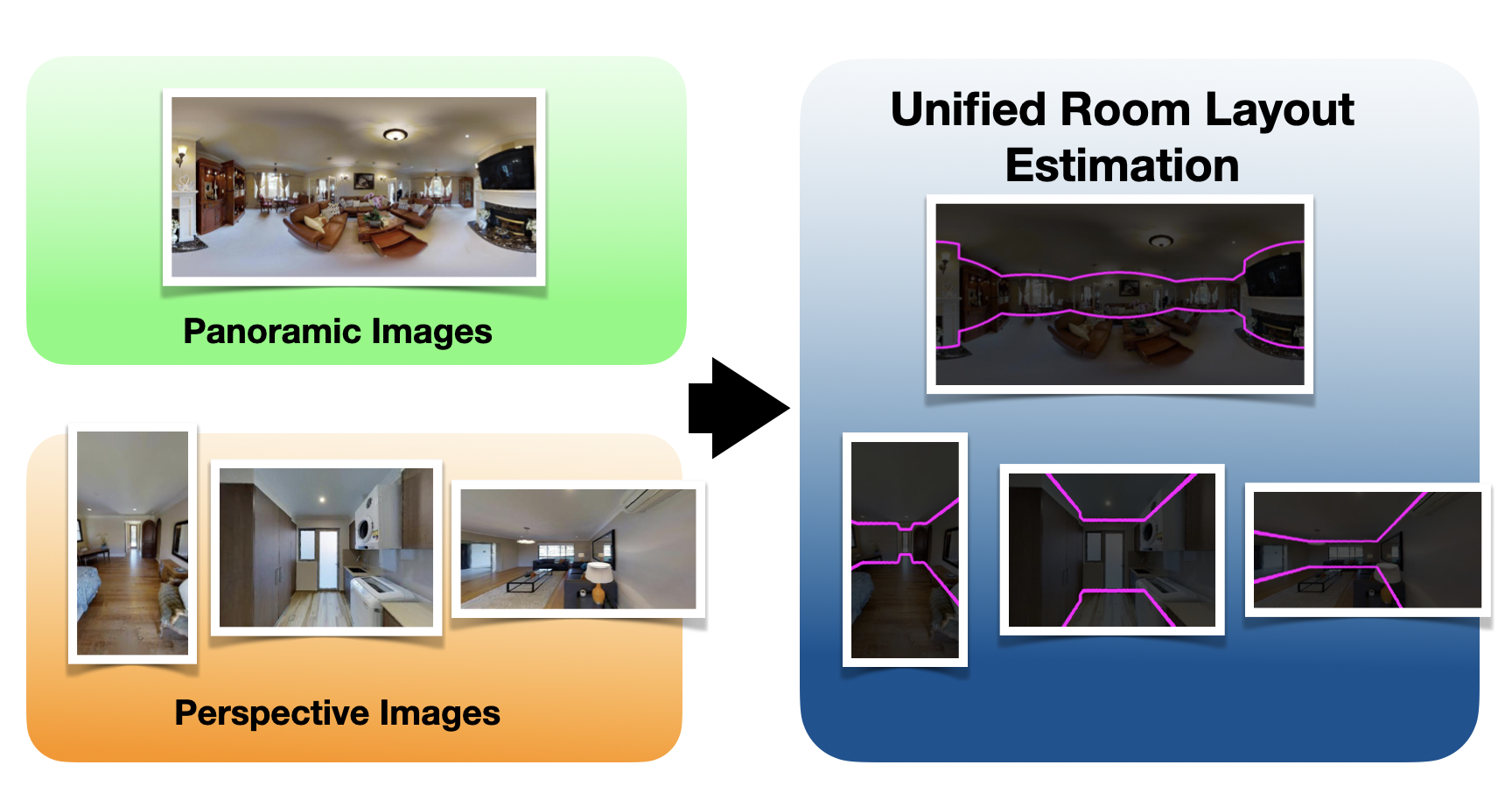

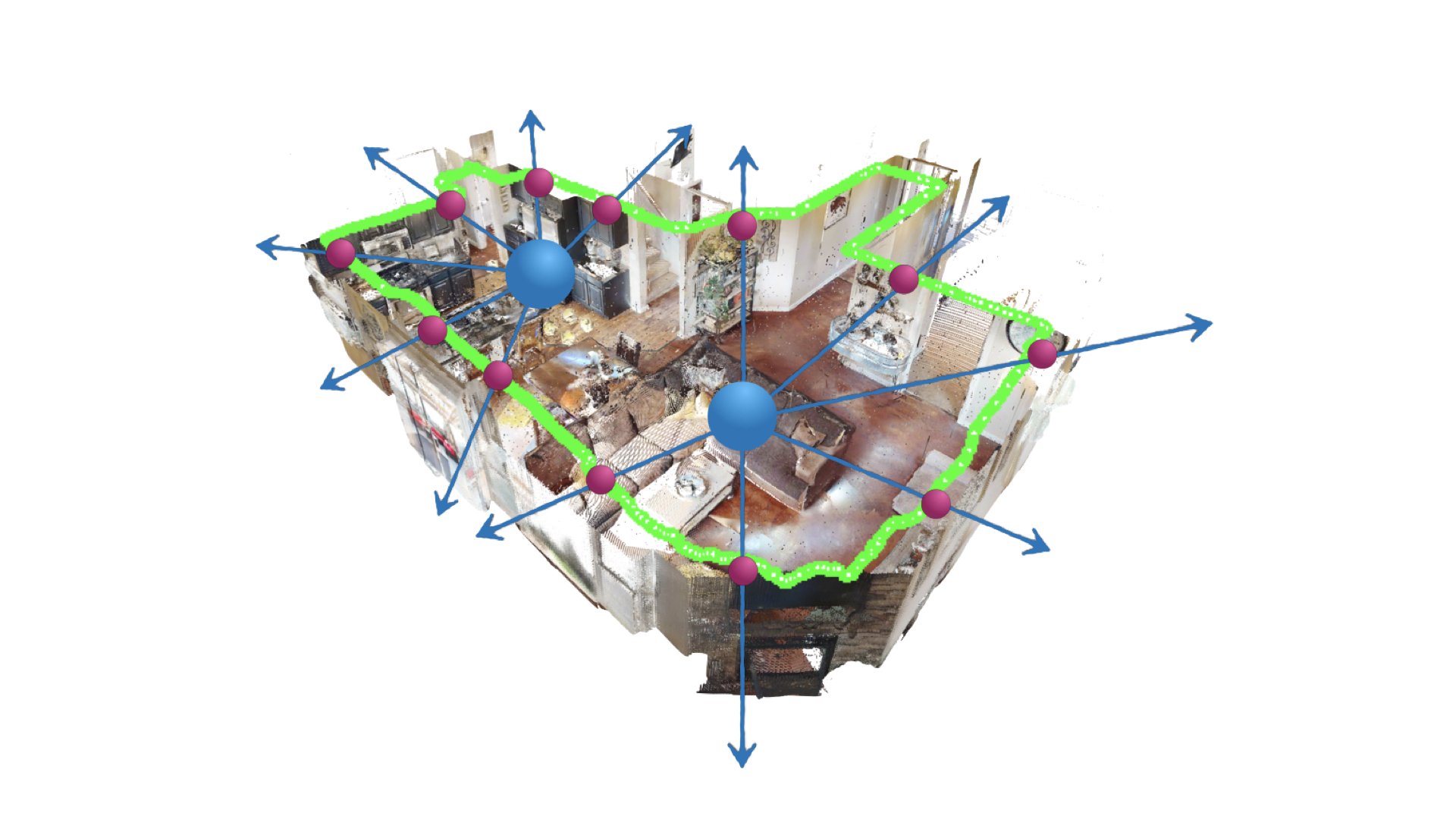

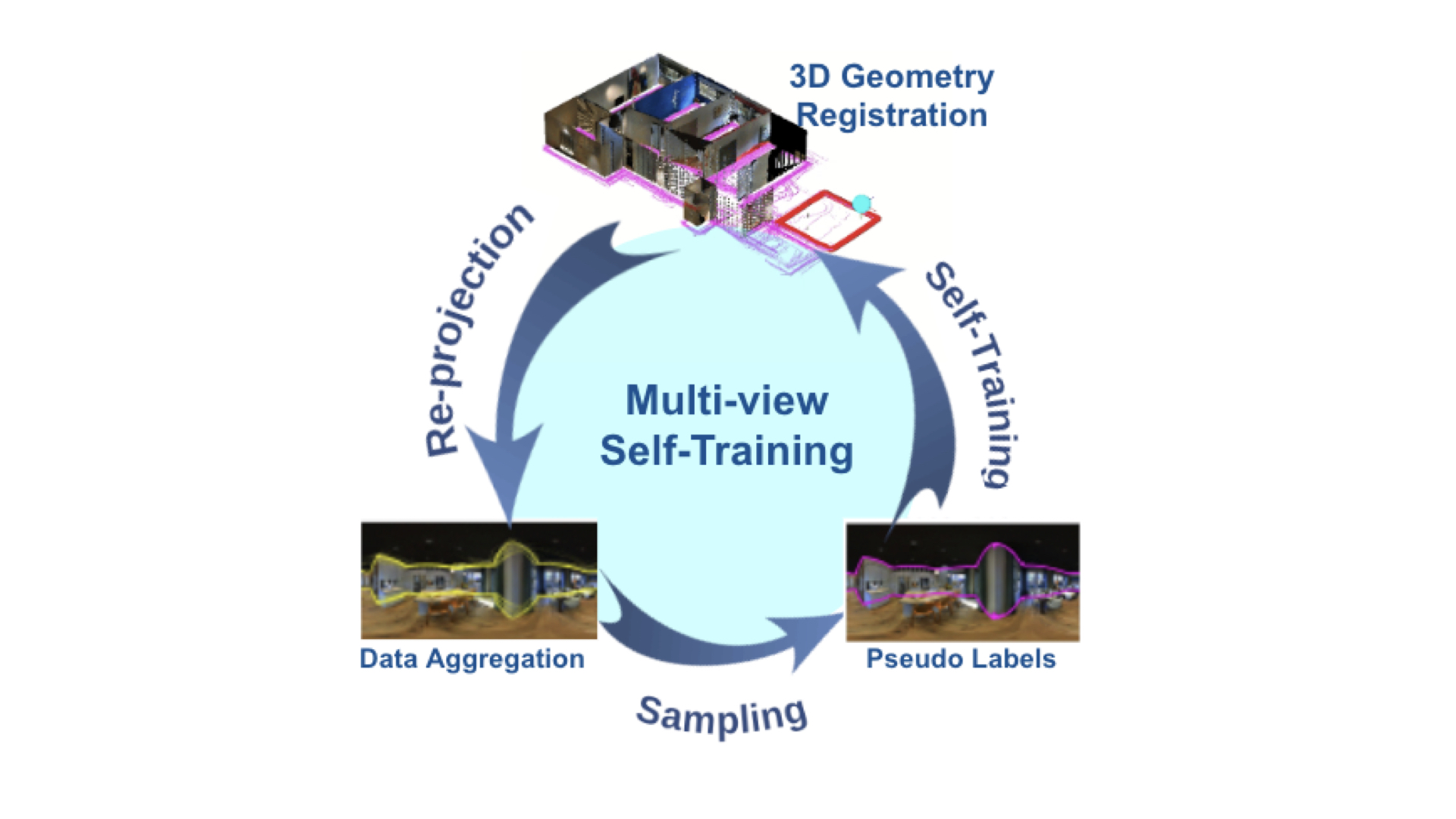

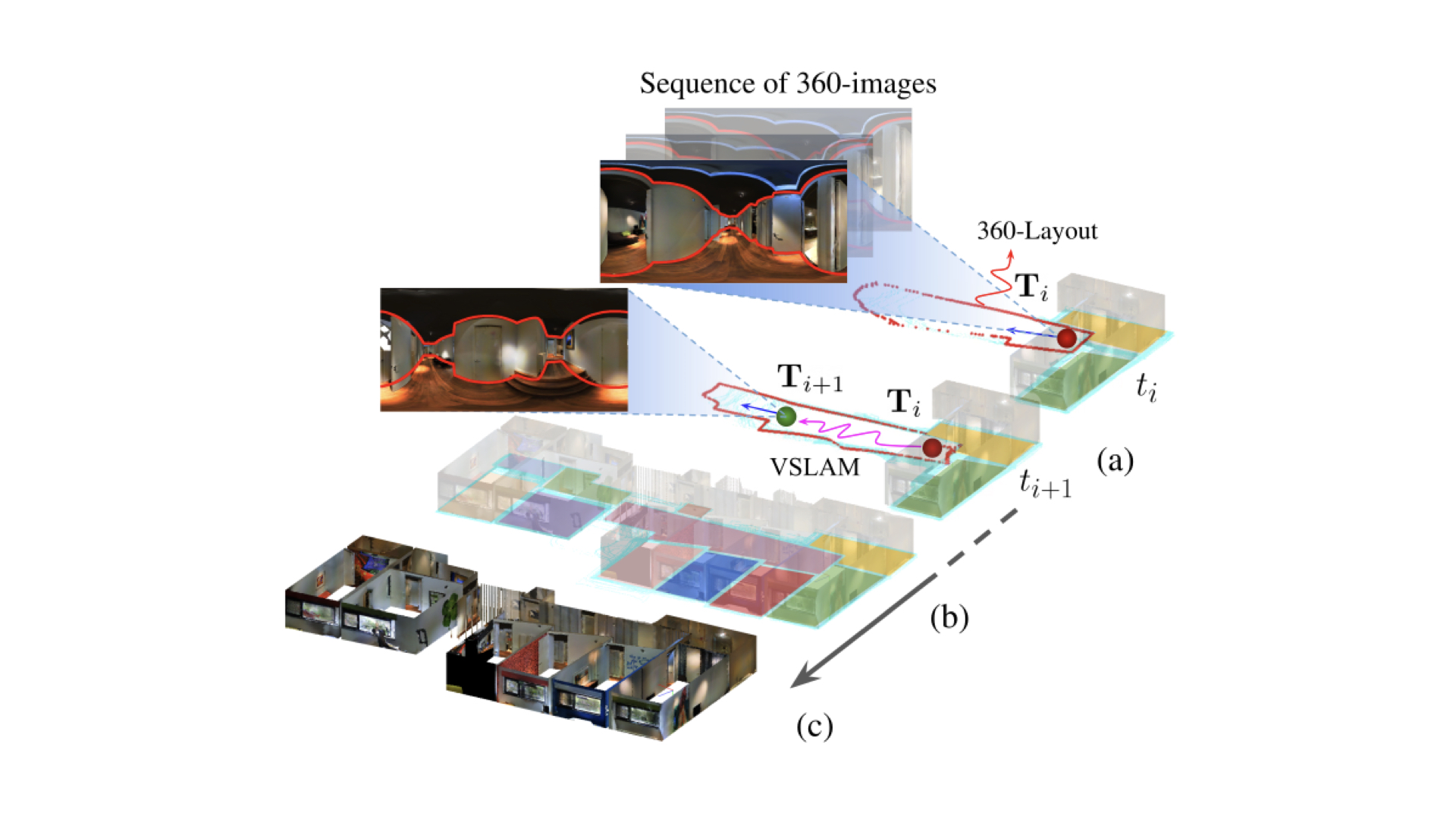

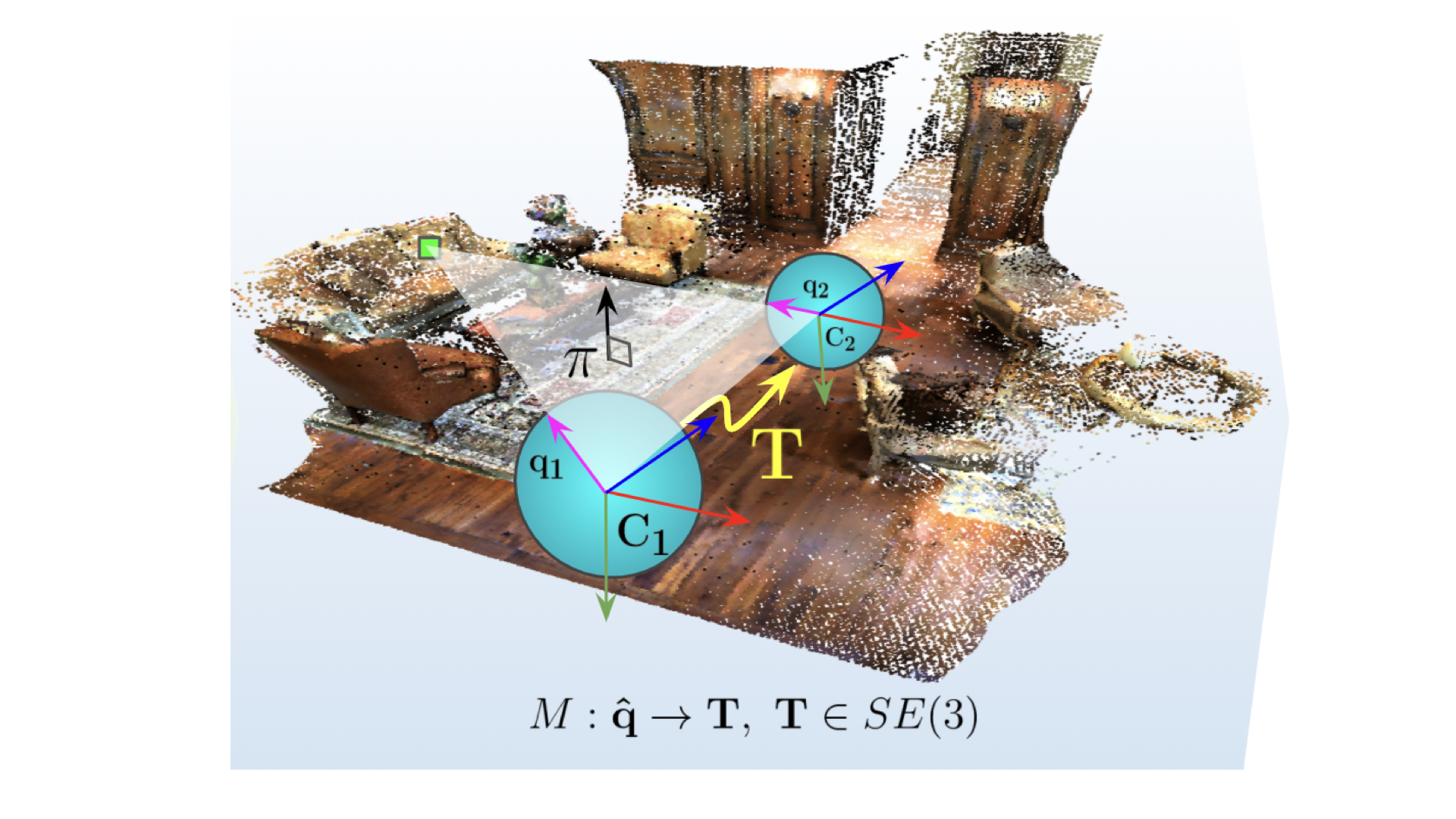

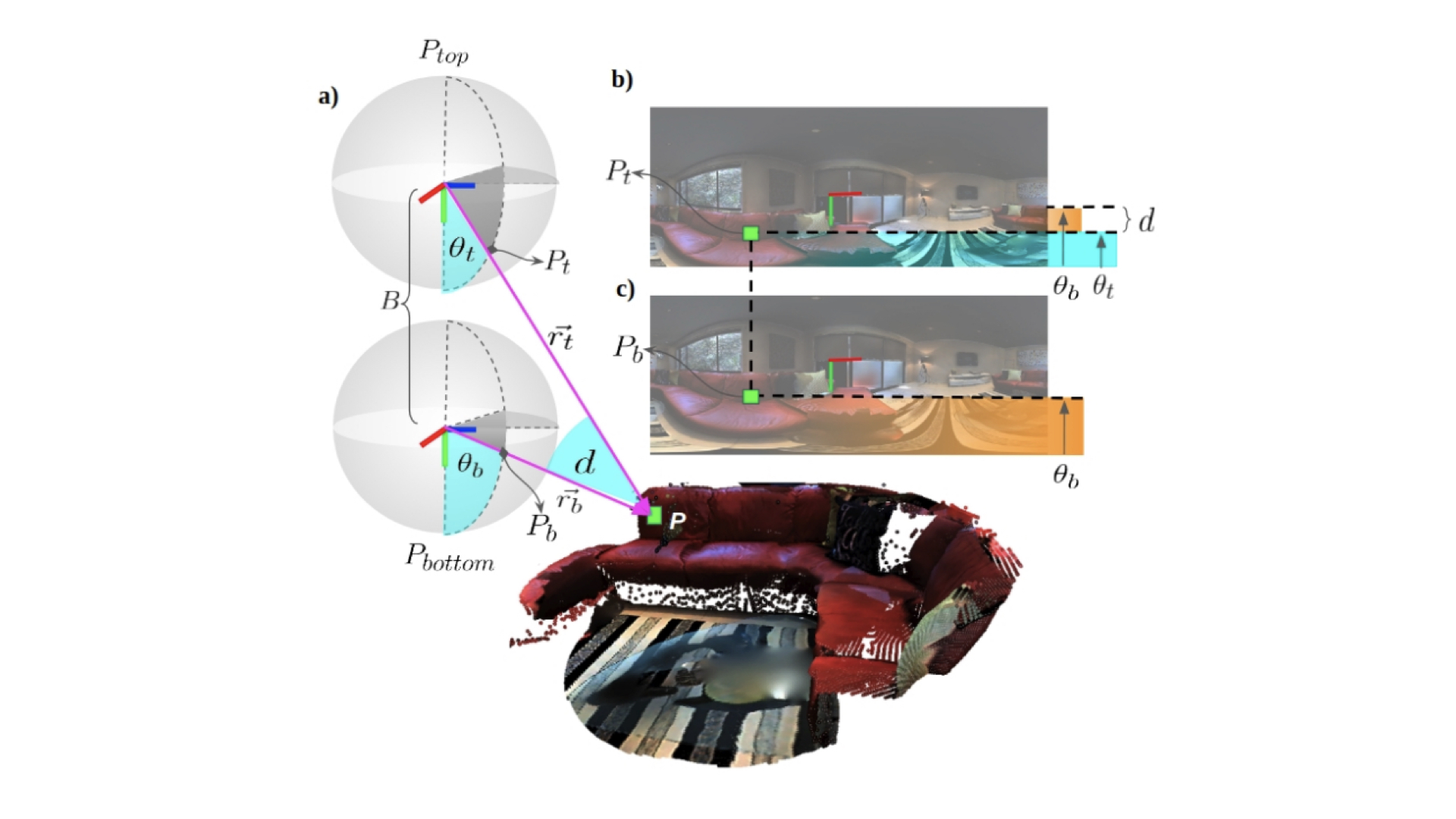

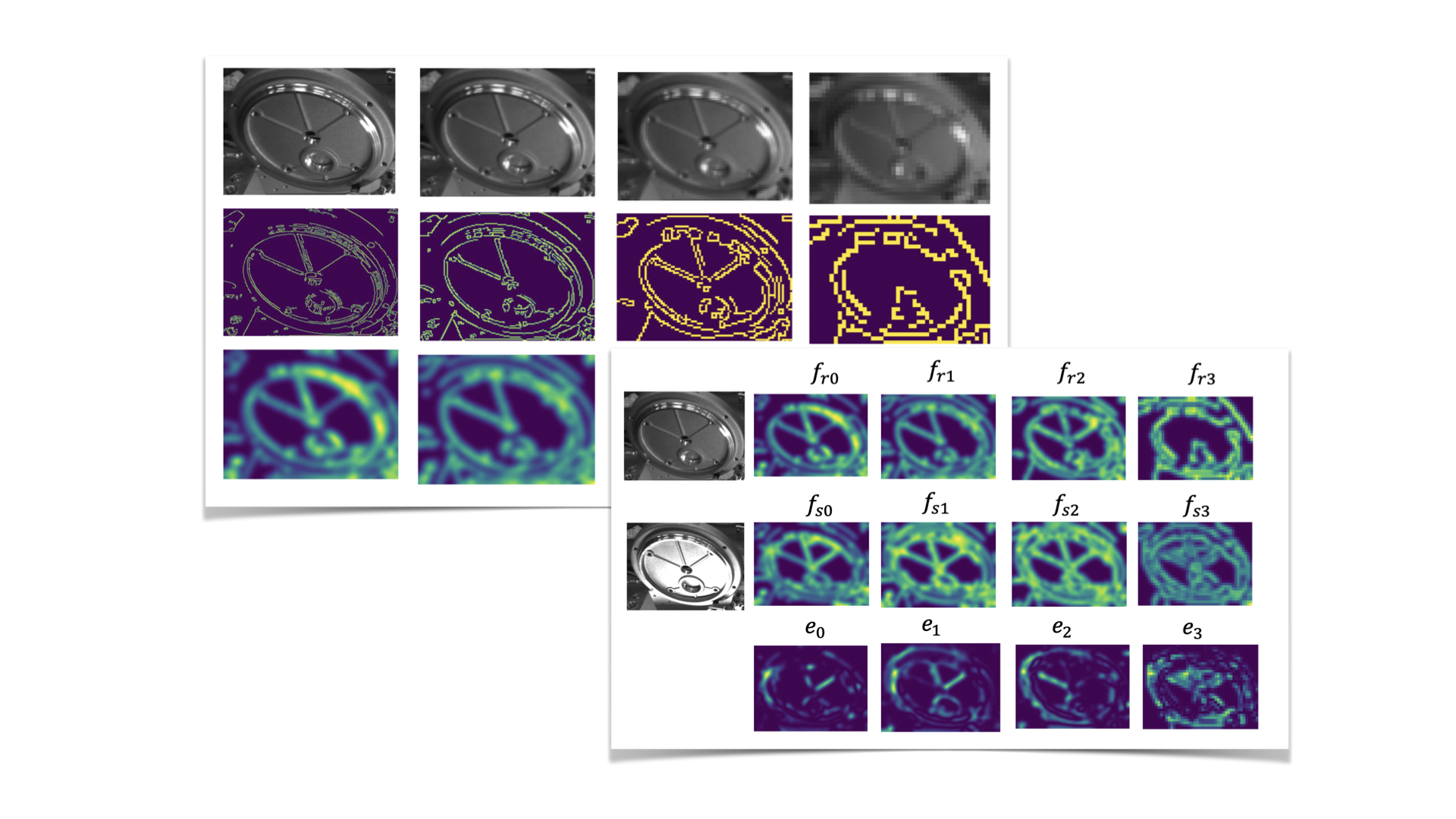

I'm a machine learning and computer vision researcher at the Industrial Technology Research Institute (ITRI) in Hsinchu, Taiwan, with 6+ years of research and engineering experience in robot perception and navigation. My work spans omnidirectional vision (fisheye and 360-degree cameras), visual SLAM, and learning-based robot reasoning with large language models (LLMs) and vision-language models (VLMs).

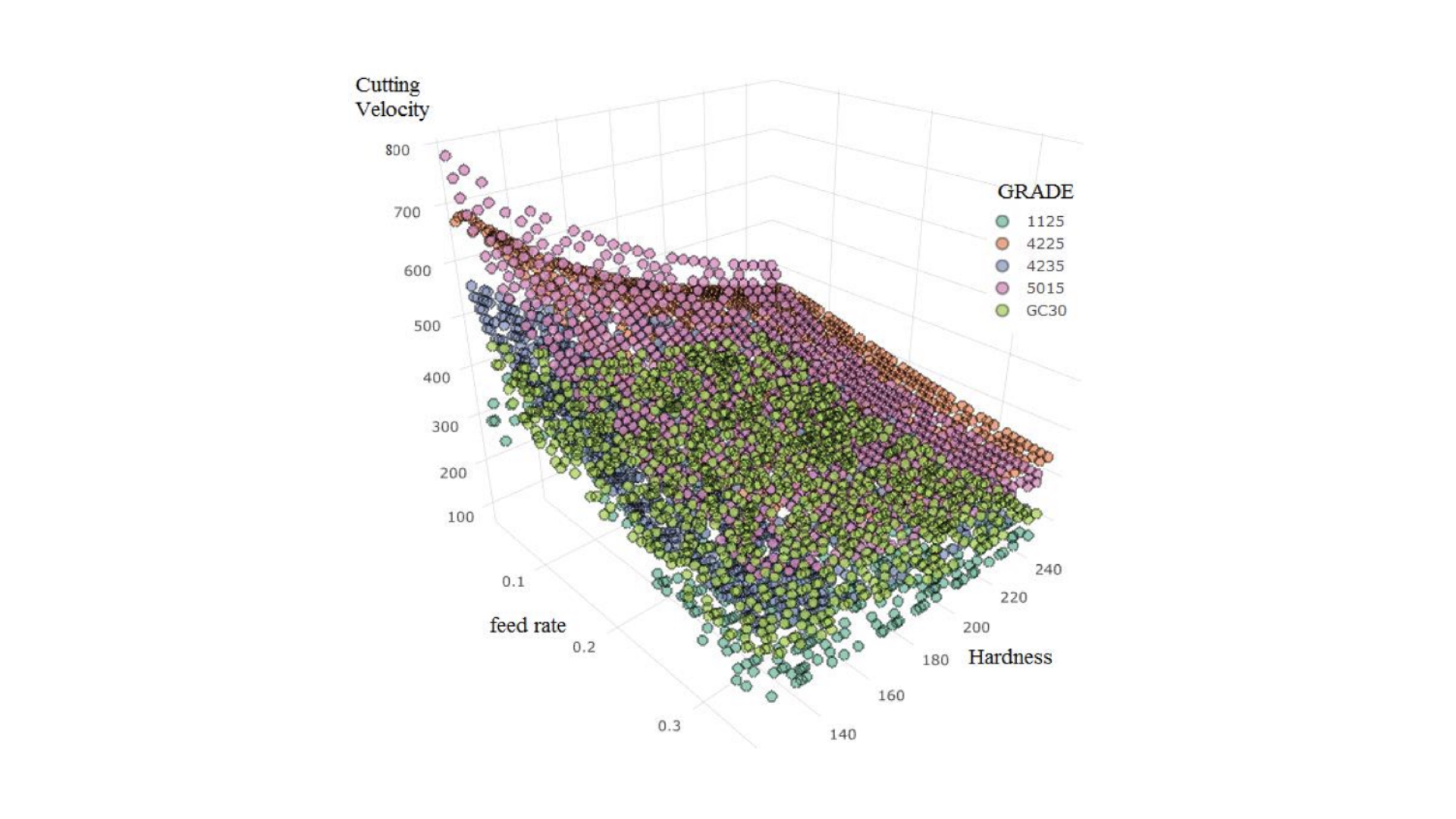

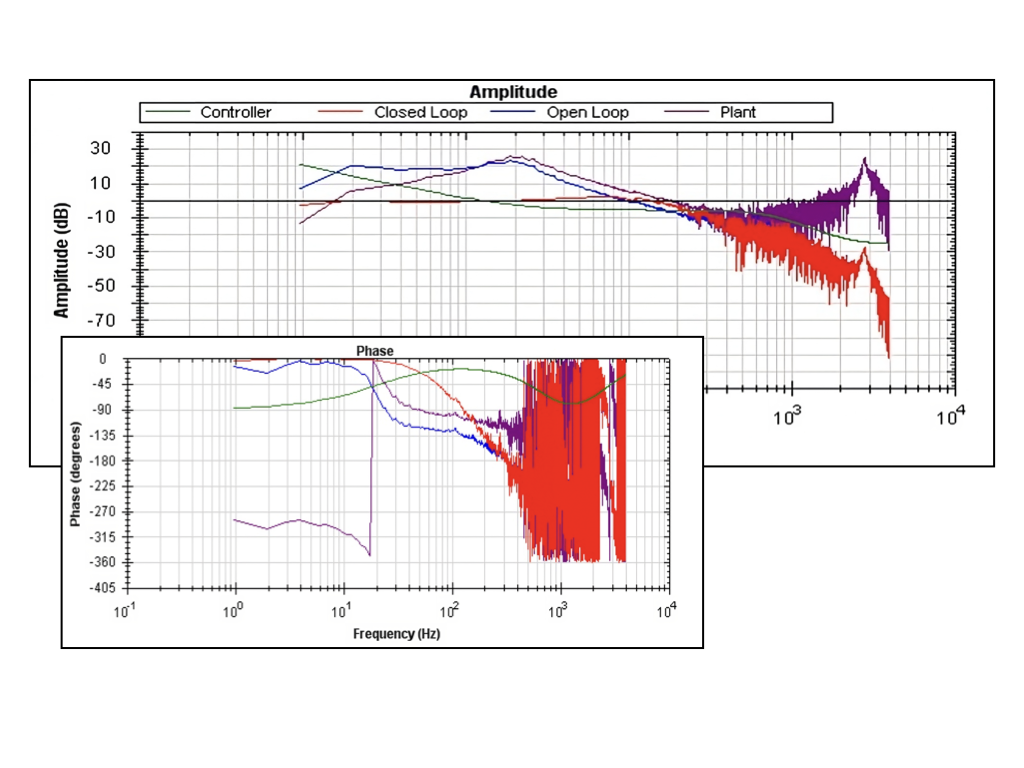

I received my Ph.D. from National Tsing Hua University in 2024, advised by Prof. Sun Min and working in collaboration with Prof. Wei‑Chen Chiu and Dr. Yi‑Hsuan Tsai. Before that, I completed my M.Sc. in Electrical and Electronic Engineering at National Taipei University of Technology in 2019 under the supervision of Prof. Syh‑Shiuh Yeh.

I'm always open to new ideas and to collaborations worldwide, so please feel free to contact me at any time.